//Dreamgazer

Rough Copies - Old Research Documentation Site

Intro

Dreamgazer so far embodies a series of projects and research experiments I have been undertaking since my later years of high school. These works include:

A several-month-long journey in high school to produce an illustrated dream journal containing experiment documentation and dream analyses, showing the progression of my lucid dream training (2011)

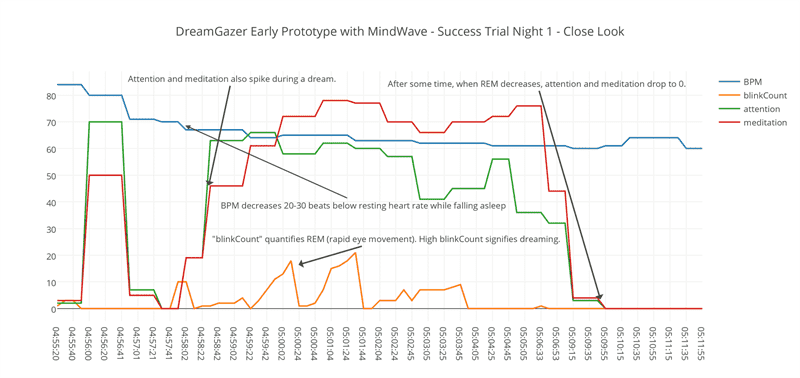

A brain-computer interface prototype (built by hacking apart the NeuroSky MindWave) that could detect when someone fell asleep to within a few minutes, and could induce lucid dreaming in me by playing a trained musical trigger during REM, the dreaming stage of sleep (2015)

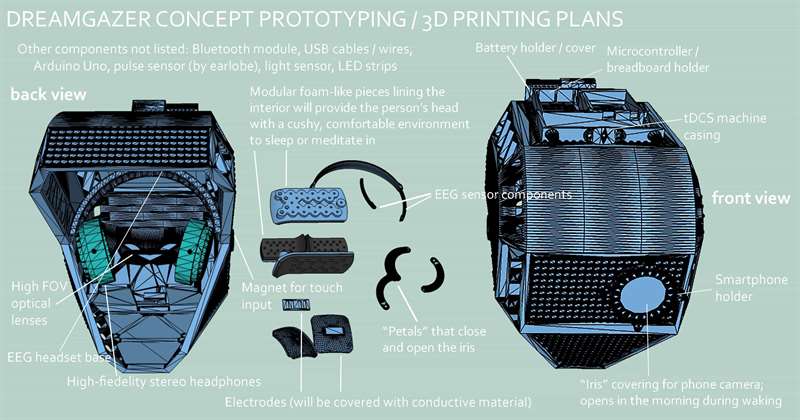

A 3D printable wearable device with various sensors, electrodes, and a VR interface meant to induce the "ultimate" lucid dreams (2017)

The dreaming machine

In 2015, I made a wearable prototype to induce lucid dreaming using an Arduino, a pulse sensor, the NeuroSky MindWave (EEG), and Processing (for software). I was able to detect when I fell asleep to within a few minutes, and to successfully induce lucid dreaming by playing music during detected stages of REM sleep, which I identified by measuring eye movement after falling asleep.
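The trigger logic described above (play music once REM is detected from eye movement during sleep) can be sketched roughly as follows. This is a minimal illustration in Python, not the original Processing code. The `Sample` fields, the `RemCueTrigger` class, the window size, the threshold, and the cooldown are all hypothetical values chosen for the example, and the `asleep` flag is assumed to come from some separate sleep-onset detector.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Sample:
    t: float             # seconds since recording started
    eye_movement: float  # hypothetical eye-activity score between 0 and 1
    asleep: bool         # assumed output of a separate sleep-onset detector


class RemCueTrigger:
    """Signal an audio cue when eye movement stays high during sleep."""

    def __init__(self, window=30, threshold=0.6, cooldown_s=600.0):
        self.window = deque(maxlen=window)  # most recent eye-movement scores
        self.threshold = threshold          # hypothetical REM cut-off
        self.cooldown_s = cooldown_s        # minimum gap between two cues
        self.last_cue_t = None              # time of the previous cue, if any

    def update(self, s: Sample) -> bool:
        """Return True when the cue should start playing for this sample."""
        if not s.asleep:
            self.window.clear()             # waking up resets the evidence
            return False
        self.window.append(s.eye_movement)
        if len(self.window) < self.window.maxlen:
            return False                    # not enough history yet
        mean_activity = sum(self.window) / len(self.window)
        cooled = (self.last_cue_t is None
                  or s.t - self.last_cue_t >= self.cooldown_s)
        if mean_activity >= self.threshold and cooled:
            self.last_cue_t = s.t
            return True
        return False
```

Averaging over a window rather than reacting to a single reading is one way to avoid firing the cue on an isolated twitch, and the cooldown keeps the music from restarting on every sample within the same REM period.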

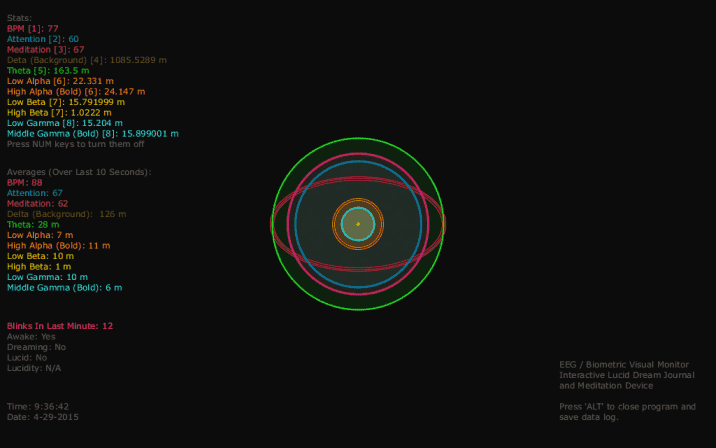

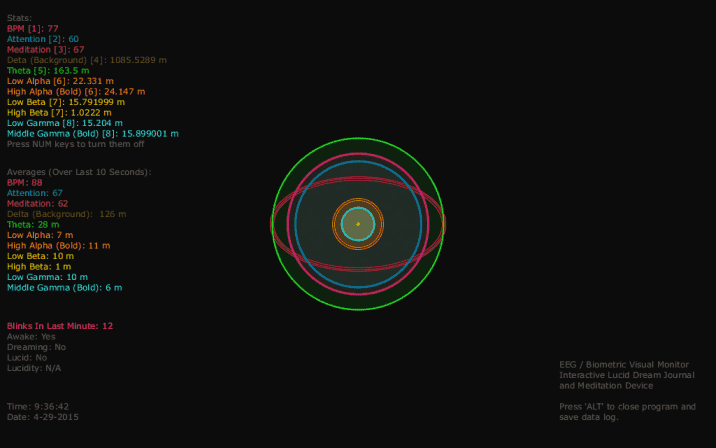

Image still from an abstract visual recording of a dream, made by tracking biometric patterns (eye movement, heart rate, and EEG waves)

I chose Processing because it already had libraries compatible with all the open-source hardware I was using, and because of its simple and immediate way of writing animations. The visualization of dreams through biometric and EEG data was something I really wanted to nail.

Video demonstration of the wearable prototype in use, with software recording a dream in real time and playing musical triggers during REM sleep

The Results

I was able to detect when I fell asleep to within five minutes, and to successfully induce lucid dreaming by playing music when REM sleep was detected.

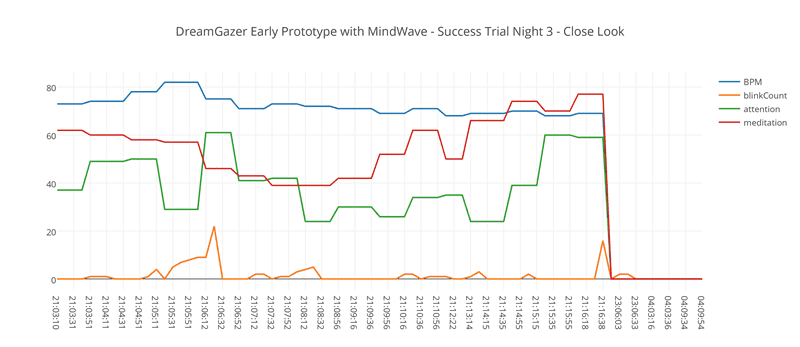

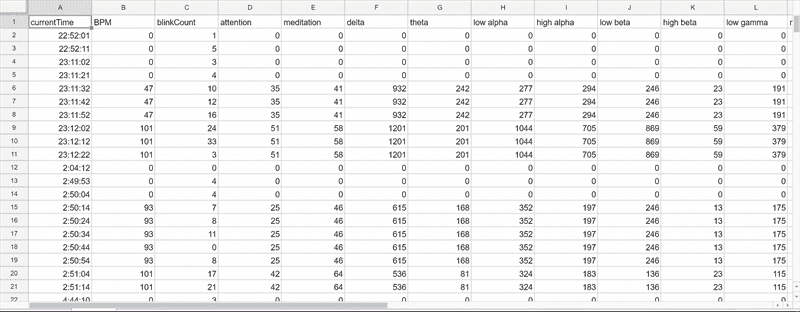

I used Processing to visualize my dreams and record my biometric data over the course of several different nights. The data was automatically fed into a spreadsheet for easy analysis.
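As a rough illustration of feeding readings into a spreadsheet-friendly file, here is a minimal Python sketch that writes one CSV row per sample. It is not the original Processing code. The column names in `FIELDS` and the helper names `log_samples` and `to_csv_text` are assumptions made for the example.

```python
import csv
import io

# Hypothetical column layout; the original spreadsheet columns are not specified.
FIELDS = ["timestamp", "heart_rate_bpm", "eeg_level", "eye_movement"]


def log_samples(out, rows):
    """Write a header line and one CSV row per sample to an open text stream."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    for row in rows:
        writer.writerow(row)


def to_csv_text(rows):
    """Return the CSV as a string, handy for checking the output in memory."""
    buf = io.StringIO()
    log_samples(buf, rows)
    return buf.getvalue()
```

To write to disk instead, the same function can be given a file opened with `open(path, "w", newline="")`, and the resulting file opens directly in most spreadsheet programs.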

You can see and use the data yourself here (if the data turns out to be useful to people, I'll go back and organize it by night).

Below you'll see annotated graphs demonstrating the success of the device, recording biometric and EEG trends corresponding with points of interest throughout a night of dreaming.

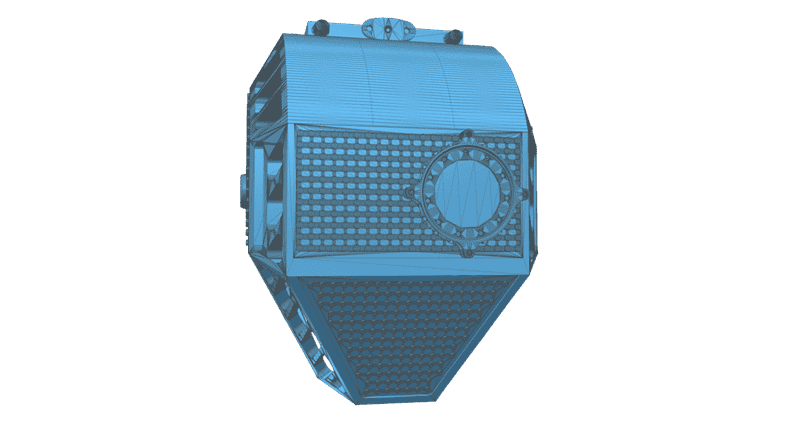

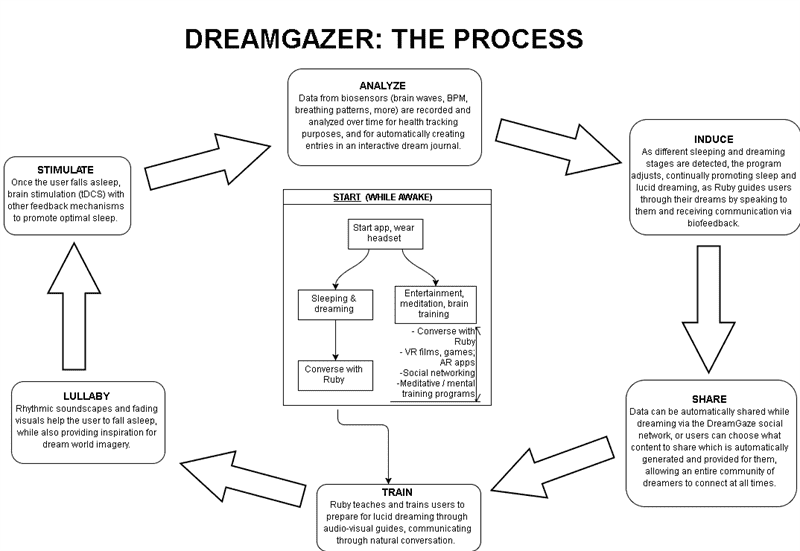

The future concept

The name Dreamgazer refers specifically to a mostly conceptualized, combined VR / AR, EEG, and tDCS (transcranial direct current stimulation) device designed to be 3D printed and then hand-assembled. Dreamgazer's software was planned to be integrated with an AI program (Emoter) that would train users to dream, and guide them through their dreams vocally by talking and responding to biofeedback.

The plans were made to be functional, and hand-assembled with proper construction and fabric materials.

The full step-by-step process of Dreamgazer's proposed functionality.

Lucid dreaming in wearables and biotechnology is something I will continue exploring. I have plans to further develop and refine new iterations of Dreamgazer, and would like to be able to open-source the hardware and software.
